Computer Consciousness?

Last month I gave a public talk at the Salon Club in London about computer consciousness, as part of a lively evening of talks exploring the ways that artificial intelligence (AI) is increasingly encroaching on our lives. One question that served as a foundation for the evening’s discussion concerned the main “transhumanist” goal: when will we be able to upload our consciousness into a computer?

Indeed, one prominent organization, the 2045 Initiative, appears intent on hastening the moment when human consciousness can live on indefinitely in a non-biological substrate, and believes it realistic to reach this milestone by 2045.

At around the same time, AlphaGo was busy thrashing the world champion at Go, seemingly bringing truly aware, clever AI ever closer. Flipping the transhumanist question around: does this mean that the day we are all destroyed by our AI overlords is just around the corner?

It is easy, especially if you fear your own mortality, to be caught up in the hype and optimism of such endeavours. It is also easy to resort to paranoia, especially when prominent scientists (in other fields), such as Stephen Hawking, proclaim doom-laden statements like:

“The development of full artificial intelligence could spell the end of the human race.”

But in this blog post I want to explain why, from a neuroscientific perspective, 2045 (and by extension the rise of our homicidal AI overlords) is fantasy. I’m a hopeful guy, and I do believe that the ingenuity of the human mind, as borne out by so many achievements in the history of science, can overcome almost any obstacle. But even so, I see this key transhumanist milestone, if it is ever reachable, as being centuries away.

As for AlphaGo: if Lee Sedol, the Go world champion it roundly beat, were to say a few meaningful words, do a dance, recognise a dog in the street, or learn a new game that afternoon, these would all be trivial achievements for the generalist human, but impossible for the specialist program. AlphaGo is a large leap forward in machine learning, but its algorithms are terribly crude compared to the human brain, and there are thousands of kilometres to go yet.

Let me start by summarising the most intricate feat of evolution in its four-billion-year history, and the most complex object in the known universe: your brain.

The Daunting Details of the Task

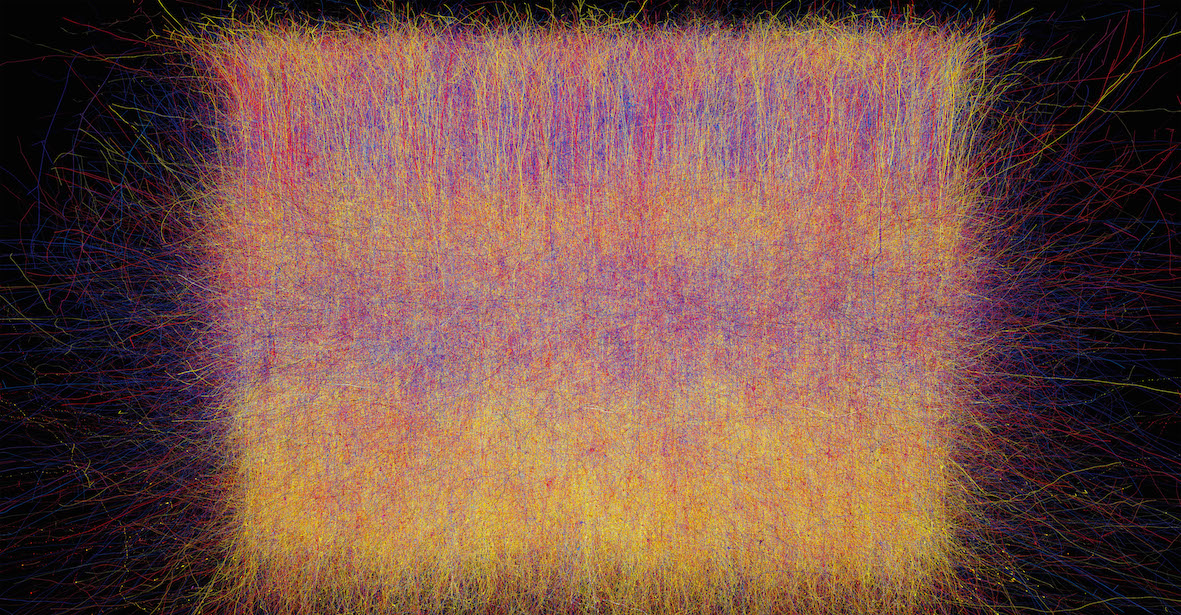

First off, anyone who tells you that digitally capturing a human brain is anything less than utterly mind-boggling is laughably wrong. The human brain has around 85 billion neurons, each connected to about 7,000 others, giving roughly 600 trillion connections in all. Inside your skull, the cabling that connects these neurons is about 165,000 km long, enough to wrap around the world four times over! But that’s just the tip of the iceberg. Neuron types number in the hundreds, and there may be as many as 1,000, each performing a subtly different computational role. There are at least as many different types of synapses, the connections between neurons that allow information to pass between them.
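
The headline figures in this paragraph can be checked with a few lines of arithmetic. This is a back-of-envelope sketch, not a model. It treats each neuron’s roughly 7,000 connections as distinct, as the text does. It also assumes an equatorial circumference for the Earth of about 40,075 km, a figure that does not appear in the original post.

```python
# Back-of-envelope check of the brain-scale figures quoted above.
NEURONS = 85e9                   # ~85 billion neurons
CONNECTIONS_PER_NEURON = 7_000   # connections per neuron, as quoted
CABLE_KM = 165_000               # total length of neural "cabling"
EARTH_CIRCUMFERENCE_KM = 40_075  # assumption: Earth's equatorial circumference

total_connections = NEURONS * CONNECTIONS_PER_NEURON  # ~6e14, i.e. ~600 trillion
laps_of_earth = CABLE_KM / EARTH_CIRCUMFERENCE_KM     # just over four laps

print(f"connections: {total_connections:.2e}")
print(f"laps of the Earth: {laps_of_earth:.1f}")
```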

Then there are the glial cells, roughly equal in number to the neurons at about 85 billion. It used to be assumed that these play only a supporting role: providing structure and nutrients, carrying out repairs, and so on. Increasing evidence is emerging, however, that glia play an information-processing role as well, although exactly what computational purpose they serve is still an open question.

If this weren’t daunting enough, there is an entire further level of computational complexity that is often overlooked. All of the above assumes that computation happens only between cells. But this assumption is utterly false. Inside almost every single biological cell, an incredibly complex set of computations is occurring in the interactions among DNA, RNA and proteins, with cascades of molecular switches being turned on or off to capture key information, or to enable or disable certain cellular machinery. So inside every single neuron or glial cell, key information-processing steps are undoubtedly going on as well. Some of these computations might not be very relevant to the representation of our minds; they might instead just look after the general upkeep of the cell. But some low-level computational processes inside these cells, including the so-called support cells, might be critical to who we are, and how we think and feel. This extra layer raises the complexity, and with it the difficulty of the transhumanist task, by orders of magnitude.

From all this, we do have some ideas about the computational processes involved, especially for small, discrete problems. But our knowledge of how the brain computes information is very, very far from complete.

Current Progress

So with all this in mind, what is the current state of play in capturing the human brain in all its incredible detail?

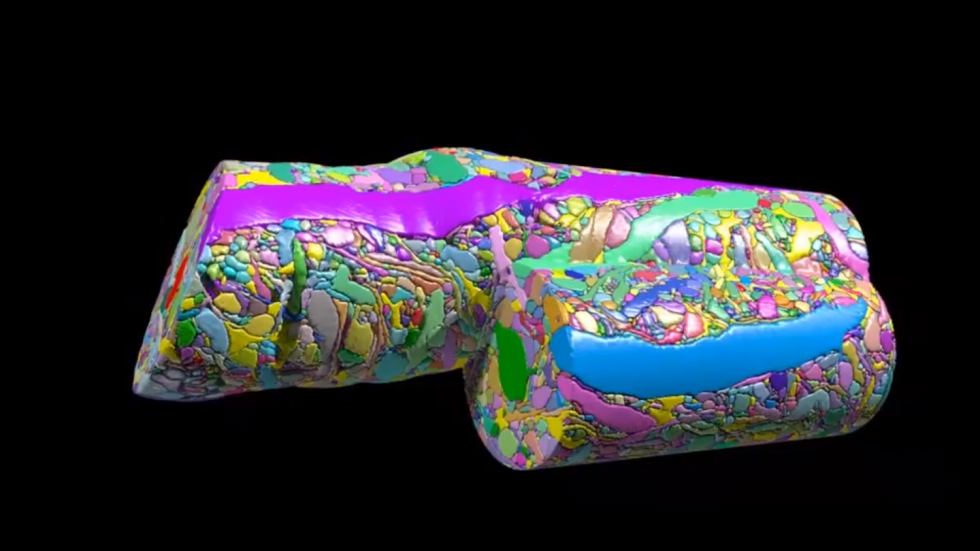

First, the anatomy. The current state of the art in trying to capture a brain comes from a large group led by Jeff Lichtman at Harvard University. In work spanning six years, published in Cell last year (with a great video and summary in Nature), they took incredibly thin slices of mouse brain, imaged them in cellular detail with an electron microscope, and then digitally reconstructed them into a 3D whole. The result is a dust-sized speck of cortex that does not even contain a single complete neuron.

Nevertheless, within this dense web of neural cables there are 1,700 synapses and 100,000 other important microscopic structures. It is monumental work, but doing the same job for an entire human brain would involve a zettabyte of data, about as much as all the digital content currently on the planet. And that’s not the only problem. This process captures a dead brain, slice by slice, in cellular detail. To truly capture a person’s consciousness, we’d need to upgrade it on several levels. It would need to be molecular, not cellular, to grab the computations inside cells; it would need to be carried out on a living human; and it would need to be fast. A scan that takes years would effectively finish with a different person from the one it started with. No technology available, or even in the remotest pipeline, can scan like this. And perhaps none ever will.

So what about making computational models of the human brain, working from the other end of the problem? Obviously this will involve a lot of guesswork, since we don’t yet have even a basic understanding of what’s actually occurring in the brain. But leaving this aside for the moment, what’s the most ambitious project out there?

The Blue Brain Project is a billion-euro consortium with the aim of creating a digital brain. But after more than a decade’s work, its latest achievement, published in Cell last year, is a computer model of a cortical column of just 31,000 rat neurons and 40 million connections.

This is only about 1/6,500 of a rat’s brain (which has about 200 million neurons). A human brain has about 400 times as many neurons as a rat’s, so this model has roughly 3 million times fewer neurons than a human brain. On top of that, the model makes many simplifications: it includes only 55 different neuron types, and it doesn’t model glial cells, blood vessels, or any of the machinery inside each cell.
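
The scale comparison can be reproduced directly from the neuron counts given in this post: 85 billion for a human, about 200 million for a rat, and 31,000 in the model. This sketch compares brains by neuron count only, ignoring mass, volume and cell types, which is a simplifying assumption. Note that the human-to-rat ratio comes out near 425, which the text rounds to 400.

```python
# Scale comparison between the Blue Brain cortical-column model and real brains,
# measured purely by neuron count (a simplifying assumption).
MODEL_NEURONS = 31_000
RAT_NEURONS = 200e6
HUMAN_NEURONS = 85e9

rat_fraction = RAT_NEURONS / MODEL_NEURONS      # model is ~1/6,450 of a rat brain
human_to_rat = HUMAN_NEURONS / RAT_NEURONS      # ~425x more neurons in a human
human_to_model = HUMAN_NEURONS / MODEL_NEURONS  # overall gap, ~2.7 million

print(f"model = 1/{rat_fraction:,.0f} of a rat brain")
print(f"human/rat neuron ratio: {human_to_rat:.0f}")
print(f"human/model neuron ratio: {human_to_model:,.0f}")
```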

The Blue Brain Project is, therefore, a vast distance from making a digital human.

Future Projects

Perhaps the most exciting imminent project, which will attempt to connect the anatomical and computational wings, is one funded by the US Intelligence Advanced Research Projects Activity (IARPA), the intelligence community’s equivalent of DARPA. IARPA has provided $100 million to the Machine Intelligence from Cortical Networks (MICrONS) program, which has just launched. On the anatomical side, MICrONS is collaborating with the Allen Institute, whose chief scientific officer is the prominent neuroscientist Christof Koch, as well as with Lichtman’s group at Harvard. It aims to capture an entire cubic mm of mouse brain in the same detail as the speck of cortex shown above, though 600,000 times larger. This will include some 100,000 neurons and around a billion synapses, with about 4 km of wiring, all in a lump of brain about the size of a poppy seed. This is just one of a set of highly ambitious neuroanatomical projects attached to MICrONS.
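
As a rough consistency check, the speck’s 1,700 synapses can be scaled up by the 600,000-fold volume factor quoted here. This sketch assumes synapse density is uniform across the tissue, which real cortex only approximates. It also derives the speck volume implied by that factor, using 1 mm³ = 10⁹ µm³.

```python
# Rough consistency check: scale the Lichtman speck up to MICrONS's cubic millimetre.
SPECK_SYNAPSES = 1_700
VOLUME_FACTOR = 600_000  # cubic mm relative to the speck, as quoted in the text
UM3_PER_MM3 = 1e9        # 1 mm = 1,000 micrometres, so 1 mm^3 = 1e9 um^3

# Assumption: synapse density is roughly uniform, so synapses scale with volume.
scaled_synapses = SPECK_SYNAPSES * VOLUME_FACTOR          # ~1 billion
implied_speck_volume_um3 = UM3_PER_MM3 / VOLUME_FACTOR    # ~1,700 cubic micrometres

print(f"scaled synapse count: {scaled_synapses:.1e}")
print(f"implied speck volume: {implied_speck_volume_um3:,.0f} cubic micrometres")
```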

But that is only half the MICrONS story. The other half is to infer, from this mountain of brain data, the information processing that occurs, and to come up with the kinds of neural algorithms that allow mammals to learn and perceive so effortlessly. The hope is that this will yield computer simulations of biological data crunching that are far more sophisticated, general-purpose and powerful than AlphaGo.

This is an enormously high-risk and incredibly ambitious project, with a huge budget. And yet, involving just a cubic mm of mouse brain, less than a millionth of the volume of our brains, it is still utterly minuscule compared to the vast scale and complexity of the organ that generates our personality, memories and mental identity.
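
The “less than a millionth” claim can be checked by dividing one cubic millimetre by the volume of a human brain. The post gives no brain volume, so this sketch assumes a typical adult value of about 1,200 cm³. Any volume above 1,000 cm³ keeps the fraction below one millionth.

```python
# What fraction of a human brain's volume is the MICrONS cubic millimetre?
MM3_PER_CM3 = 1_000
HUMAN_BRAIN_CM3 = 1_200  # assumption: typical adult brain volume (roughly 1,100-1,400 cm^3)
MICRONS_VOLUME_MM3 = 1

fraction = MICRONS_VOLUME_MM3 / (HUMAN_BRAIN_CM3 * MM3_PER_CM3)
print(f"fraction of a human brain: {fraction:.1e}")  # below one millionth
```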

Computer Consciousness? Don’t hold your breath.

I love life, always have. And I don’t want it to end. We’ve already collected more books on our shelves than I can read in a standard lifetime. There is so much I want to learn, to explore, to experience. And the idea that I could live on, by uploading my brain into a robot in the next few decades, is immensely appealing. Sadly, though, such forecasts completely fail to take into account the unimaginable complexity of the human brain, and the fact that our attempts to capture and understand it are still in their infancy. 2045 sounds to me like a completely naïve guess. 2345, maybe. But even then, I wouldn’t hold my breath.

4 comments


Well, you make an assumption here that is not explicitly stated in the text. You seem to assume that the way to implement one’s consciousness in, let’s say, silicon form is by whole-brain emulation. That might not be true. It might be that a small subset of regions in the brain is sufficient. Or it might be enough for the cognitive architecture, i.e. the software, to be isomorphic with the mind of the person whose consciousness is to be uploaded.

This is what Ben Goertzel is trying to do with OpenCog, although I don’t think he is currently working on mind uploading, but rather on AGI.

On another note, you could still argue that the copy would not be you, in the sense that if you were still alive after the scan was done, there would now be two of you.

Author

Hi Benjamin,

Thanks for the comment. You’re right that it’s possible that the entire brain may not be needed to recreate a person in silicon form. This is an open question.

There is also the issue of how accurately you want to capture a person. It might be that you can capture 90% of a person’s character with 10% of the neurons. But would anyone settle for that? Would that be like suffering 10% death immediately?

My intuition is that to fully capture a person, you probably do need all of the brain, and maybe even neurons in the rest of the body (the complex of neurons in the gut, for instance). But I’d be amazed if you didn’t need at least the entire cortex. So we are still talking about billions of neurons, and the technological barriers for this are almost as bad as for the entire brain.

Yeah, I see your point. I guess it all comes down to computational resources, and as of today we are still far off from the temporal and spatial resolution needed for a realistic scan of a “person”.

What I meant is that it might not even be necessary to simulate any neurons at all, in the sense that we don’t know which level is the right one for capturing a given person P’s “essence”, so to speak. It might be that what matters is the computations being performed, and just because they are implemented by neurons in the brain doesn’t mean that is the only way to go.

But I think that one crucial thing that some AGI developers seem to overlook is the role of body perception in cognition. I might be biased, as I have been doing some research at Ehrsson’s lab, but I think that for an AGI to approximate human-like consciousness, it would have to have some sense of body ownership and agency. Whether you actually need a physical implementation of this body or can simulate it, I don’t know.

But yeah, I agree, as of now we can only speculate, but it will be highly interesting to see how it all turns out.

Best,

Ben

Great article for understanding the current status of AI. I would like to share a new concept of the mechanism of consciousness.

When thinking about the mechanism of consciousness, a key step is rethinking Chalmers’ hard problem. In doing so, a new concept should be considered: unit qualia.

Complicated qualia, e.g. ‘the sense of touch in the throat that feels beer being drunk’, aren’t unit qualia. They are built from many unit qualia, and also from associations with other qualia. Those associations are formed through synaptic connections.

For example, ‘blue LED with no emotion’ is a unit qualia, and a starting point for the new consciousness mechanism: http://mambo-bab.hatenadiary.jp/entry/2014/12/09/005711